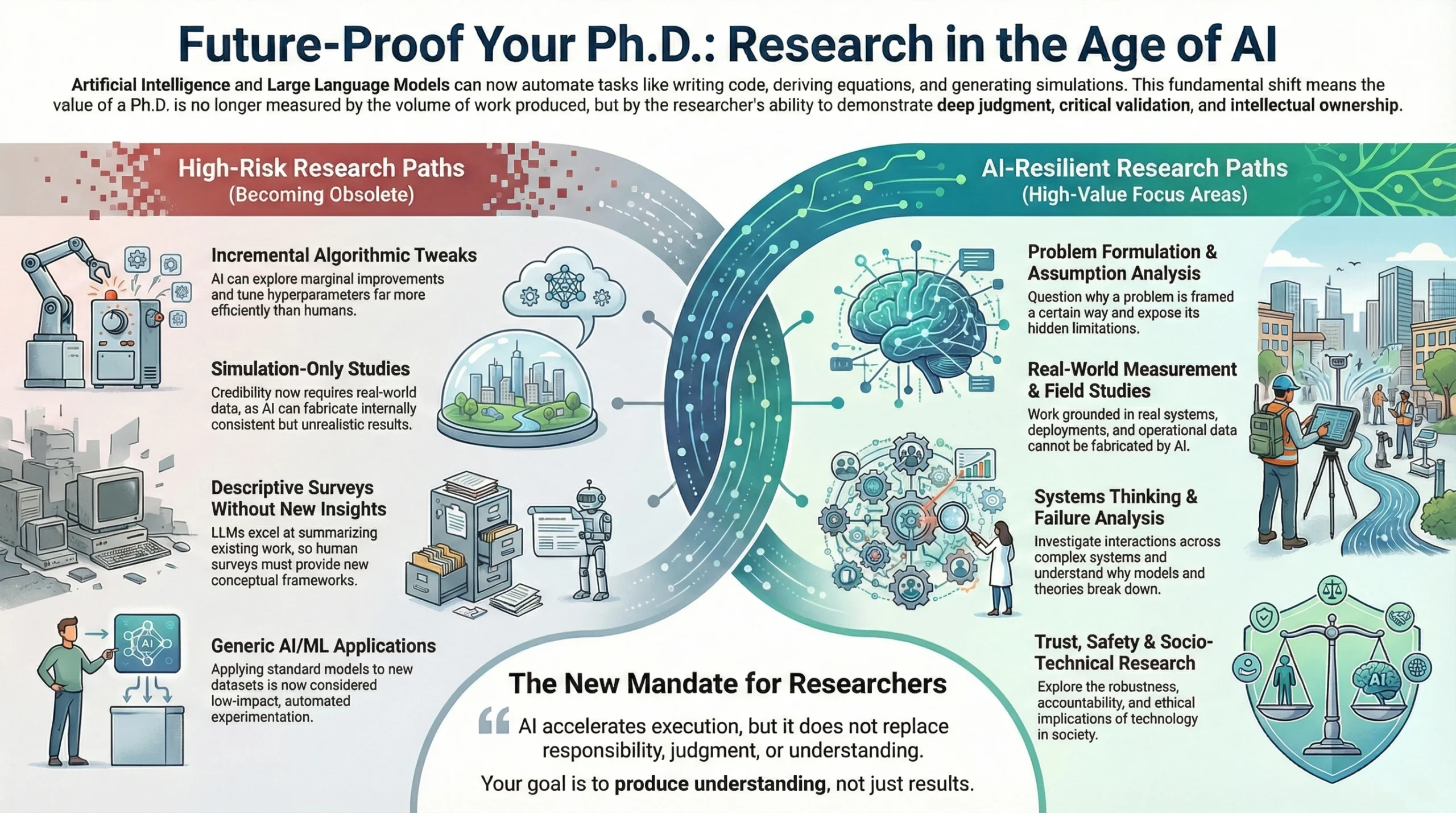

The rapid advancement of Artificial Intelligence (AI) and Large Language Models (LLMs) has fundamentally altered the landscape of research in Computer Science, Electronics, and Information Technology. Tasks that once required significant intellectual and manual effort, such as writing technical papers, deriving equations, implementing algorithms, and generating experimental results, can now be partially or fully automated. While this transformation has increased productivity, it has also challenged traditional notions of originality, novelty, and scholarly contribution.

As a result, many classical research directions that were once considered suitable for Ph.D.-level investigation are losing relevance, while new expectations are emerging around problem formulation, validation, systems thinking, and experimental credibility. This article examines how the AI era is reshaping doctoral research in CSE, ECE, and IT by identifying research areas that are becoming weak or high-risk, highlighting domains that remain resilient to automation, and clarifying how research quality and impact are likely to be evaluated in the coming years. The goal is to help future researchers make informed, future-proof decisions when planning their Ph.D. research in an AI-dominated academic environment.

Specifically, the article states which classical research directions are no longer strong Ph.D. candidates in the AI era, explains why, and identifies the directions that remain resilient.

1. The Fundamental Reality of the AI Era

Artificial Intelligence has dramatically reduced the cost of producing technical content, but it has not reduced the difficulty of producing trustworthy knowledge.

In today’s research ecosystem, AI systems can effortlessly generate grammatically flawless papers, mathematically consistent equations, executable code, synthetic datasets, and visually compelling figures. As a result, outputs that once required months of effort can now be produced in minutes, fundamentally changing how originality and expertise are perceived.

Consequently, the value of a Ph.D. is no longer tied to how much one can produce, but to whether the researcher can demonstrate judgment, insight, validation, and responsibility for the ideas being presented.

2. Structural Changes in How Research Is Judged

Before the AI/LLM Era

- Writing technically dense papers was seen as evidence of expertise, since technical expression itself required deep familiarity with the subject.

- Long mathematical derivations were often accepted as indicators of originality, even when built on well-known assumptions.

- Simulation results were frequently trusted if they were internally consistent and visually clean.

- Incremental extensions of existing methods were usually sufficient to establish novelty.

After the AI/LLM Era

- Large language models can now autonomously generate entire manuscripts, including equations, pseudo-code, experiment sections, and references, with convincing coherence.

- AI can rapidly re-derive known mathematics, implement algorithms, and explore large design spaces.

- Therefore, none of these outputs alone can reliably demonstrate that a human researcher has contributed something fundamentally new.

The emphasis has shifted from presentation and volume to conceptual ownership, defensibility, and intellectual accountability.

3. Research Directions That Are Becoming Weak or High-Risk

These areas are not forbidden, but they are poor Ph.D. choices unless pursued with exceptional depth, realism, or foundational innovation.

❌ 1. Incremental Algorithmic Tweaks

Research focused on marginal improvements—such as slightly modifying an algorithm, tuning hyperparameters, or adding heuristic rules—has lost much of its academic strength.

Since AI systems can automatically search these design spaces far more efficiently, it is increasingly difficult to argue that such work represents deep human insight rather than automated optimization.
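To make the point concrete, the following is a minimal sketch of how mechanical such a search can be. It uses plain random search, one of the simplest automated strategies. The function `toy_validation_score` is a hypothetical stand-in for a real train-and-validate run, and the parameter names and ranges are assumptions chosen purely for illustration.

```python
import math
import random


def toy_validation_score(learning_rate, momentum):
    """Hypothetical stand-in for a real train-and-validate run (assumption)."""
    # The score peaks at learning_rate = 1e-2 and momentum = 0.9 and is never above 0.
    return -((math.log10(learning_rate) + 2.0) ** 2) - 10.0 * (momentum - 0.9) ** 2


def random_search(n_trials, seed=0):
    """Sample configurations at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_config, best_score = None, -math.inf
    for _ in range(n_trials):
        config = {
            "learning_rate": 10 ** rng.uniform(-5, 0),  # log-uniform over [1e-5, 1]
            "momentum": rng.uniform(0.0, 0.99),
        }
        score = toy_validation_score(**config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score


if __name__ == "__main__":
    config, score = random_search(n_trials=200)
    print(config, score)
```

Nothing in this loop requires insight into the algorithm being tuned, which is why a contribution that amounts to a better point in such a space is hard to defend as a thesis.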

❌ 2. Descriptive Surveys Without Intellectual Synthesis

Survey papers that merely summarize, categorize, or list existing work without providing a new conceptual framework, historical reinterpretation, or critical unification are rapidly losing relevance.

Because LLMs already excel at summarization, human-authored surveys are now expected to deliver theory-building, not literature aggregation.

❌ 3. Simulation-Only or Tool-Only Studies Without Reality Checks

Research that relies exclusively on simulations, benchmarks, or synthetic datasets—especially under idealized assumptions—faces increasing skepticism.

AI can fabricate perfectly smooth curves and internally consistent results, making credibility and validation more important than polish.

❌ 4. Generic AI / ML Applications

Applying standard AI models to new datasets or slightly modified problem statements, without addressing deeper theoretical, systemic, or societal implications, has become an overcrowded and low-impact path.

Without a strong problem formulation, such work is indistinguishable from automated experimentation.

❌ 5. Equation-Centric or Framework-Centric Work Without Interpretation

Research that focuses on formalism—equations, architectures, or frameworks—without clearly explaining assumptions, limitations, or real-world relevance is increasingly undervalued.

AI can generate formal structures, but it cannot reliably assess their meaning or applicability.

4. Classical Research Topics That Are No Longer Strong Ph.D. Candidates in the AI Era

This section explicitly lists traditional, once-popular research topics across CSE, ECE, and IT that are now weak as standalone Ph.D. themes in the AI era.

4.1 Image Processing & Computer Vision

❌ Weak or Obsolete as Primary Ph.D. Topics

- Classical edge detection and filtering comparisons (Sobel, Prewitt, Canny, median, Wiener) without new theoretical or application context.

- Histogram-based enhancement, denoising, or sharpening evaluated using PSNR/SSIM alone.

- Hand-crafted feature pipelines (SIFT, SURF, HOG, LBP) without integration into modern systems.

- Classical segmentation methods (thresholding, region growing, watershed) studied in isolation.

- Feature extraction + SVM / k-NN classification pipelines.

Reason:

These techniques are mature, extensively documented, and easily surpassed by modern AI-based methods or automated tools, offering little room for original contribution.
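As a small illustration of why a PSNR-only evaluation is thin evidence, the sketch below computes PSNR from its standard definition, 10 · log10(MAX² / MSE). Because the metric depends only on the mean squared error, two visually very different distortions can receive the same score. The 8-pixel "images" are made-up values for illustration, not data from any study.

```python
import math


def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(distorted):
        raise ValueError("inputs must have the same number of pixels")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 8-pixel "image" (made-up values, for illustration only).
reference = [100] * 8
uniform_shift = [102] * 8              # every pixel is off by 2
local_damage = [104, 104] + [100] * 6  # two pixels are off by 4, the rest are exact

print(psnr(reference, uniform_shift))
print(psnr(reference, local_damage))
```

Both distortions have a mean squared error of 4, so the two printed values agree even though one error is spread evenly and the other is concentrated in two pixels. A study that reports only this number cannot distinguish the two cases.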

4.2 Data Mining & Classical Machine Learning

❌ Weak or Obsolete as Primary Ph.D. Topics

- Association rule mining using Apriori or FP-Growth with minor optimizations.

- Clustering comparisons (k-means, hierarchical, DBSCAN) on standard datasets.

- Classical feature selection using entropy, correlation, or mutual information.

- Predictive modeling using Naive Bayes, Decision Trees, or Random Forests without theoretical grounding.

- Accuracy-based benchmarking on UCI-style datasets.

Reason:

AI systems can now automate feature engineering, model selection, and evaluation, making such studies insufficiently novel unless they challenge fundamental assumptions or data regimes.

4.3 Networking & Communication Systems

❌ Weak or Obsolete as Primary Ph.D. Topics

- Basic routing protocol comparisons under fixed traffic models.

- TCP variant performance evaluation using default simulators.

- Rule-based MAC scheduling tweaks without architectural impact.

- QoS-based routing without deployment or measurement realism.

- Classical queueing-theory models without empirical validation.

Reason:

These problems have been studied for decades, and AI-driven optimization can now explore such design spaces automatically.

4.4 Information Technology (IT) & Software-Centric Domains

This is where many Ph.D. aspirants are currently at the highest risk in the AI era.

❌ Software Engineering

- Code clone detection using classical metrics.

- UML-based design optimization without empirical grounding.

- Software metrics correlation studies (LOC, cyclomatic complexity).

- Bug prediction using classical ML on open-source datasets.

Reason:

AI-powered code analysis tools already outperform many academic approaches, making incremental studies uncompetitive.

❌ Databases & Information Systems

- Query optimization using heuristic or cost-model tweaks.

- Indexing strategies without new workload models.

- Transaction scheduling comparisons under synthetic workloads.

- Classical data warehousing or OLAP performance studies.

Reason:

Modern database systems already incorporate adaptive, AI-driven optimization, reducing the novelty of such research.

❌ Web Technologies & Enterprise IT

- Web service composition using rule-based methods.

- QoS-aware service selection without real deployment constraints.

- Enterprise workflow optimization using static models.

- E-commerce recommendation systems using classical collaborative filtering.

Reason:

These problems are now largely solved in practice using large-scale AI systems and real-time analytics.

❌ Cloud Computing & Distributed Systems (Classical)

- VM placement using heuristic scheduling.

- Load balancing with rule-based algorithms.

- Static autoscaling policies.

- Resource allocation without real cost, energy, or failure modeling.

Reason:

Cloud platforms already use AI-driven orchestration, making classical approaches obsolete unless deeply rethought.

4.5 Cybersecurity (Classical Approaches)

❌ Weak or Obsolete as Primary Ph.D. Topics

- Signature-based intrusion detection comparisons.

- Rule-based anomaly detection.

- Static malware classification using handcrafted features.

- Attack taxonomy papers without threat evolution analysis.

Reason:

Attack patterns evolve faster than static models, and AI-based systems dominate practical defenses.

5. Research Areas That Remain Strong and AI-Resilient

These areas depend on human judgment, contextual reasoning, and responsibility, which AI lacks.

✅ Problem Formulation and Assumption Analysis

Research that questions why problems are framed a certain way, identifies hidden assumptions, or exposes overlooked constraints remains extremely valuable.

✅ Systems and End-to-End Thinking

Research that studies interactions across layers, components, organizations, or domains reveals emergent behaviors beyond isolated optimization.

✅ Measurement, Experimentation, and Field Studies

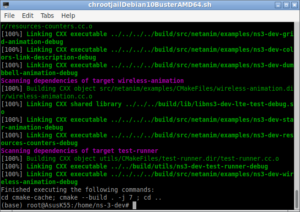

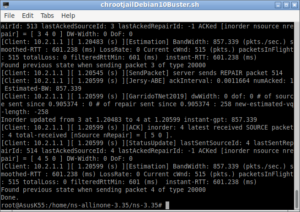

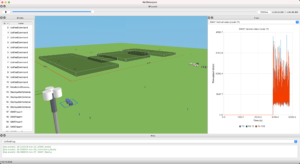

Work grounded in real systems, deployments, measurements, and operational data is inherently resistant to AI fabrication.

✅ Validation, Failure Analysis, and Negative Results

Understanding where models break and why assumptions fail is increasingly important as AI-generated optimism rises.

✅ Infrastructure, Platforms, and Tool Building

Datasets, simulators, frameworks, benchmarks, and reproducibility pipelines provide long-term scientific value.

✅ Interdisciplinary and Socio-Technical Research

Research combining computing with law, policy, medicine, economics, or ethics requires judgment and responsibility beyond AI capability.

✅ Trust, Safety, and Verification

Research on robustness, explainability, accountability, security, and correctness of AI and IT systems is growing in importance.

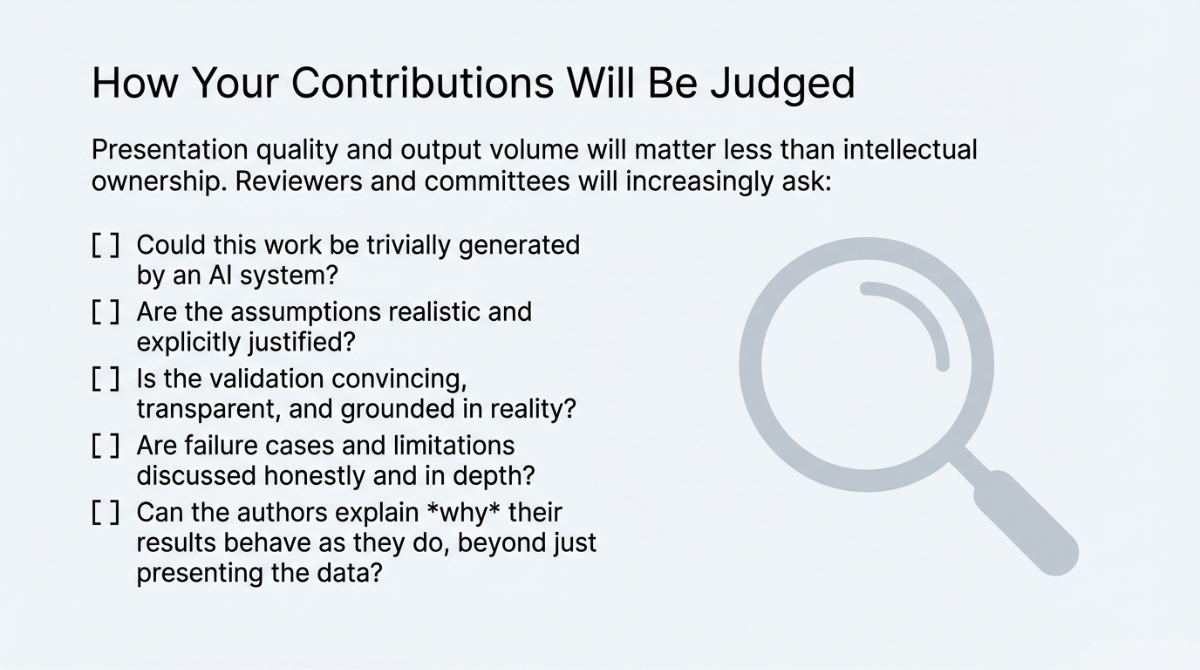

6. How Publications Will Be Evaluated Going Forward

Reviewers will increasingly ask:

- Could this work be trivially generated by an AI system?

- Are assumptions realistic and justified?

- Is validation convincing and transparent?

- Are failure cases discussed honestly?

- Can the authors explain why results behave as they do?

Presentation quality will matter less than intellectual ownership.

7. How a Future Ph.D. Researcher Should Plan

- Choose problems that resist automation and brute-force optimization.

- Focus on building durable artifacts, not just papers.

- Prioritize depth and defensibility over publication count.

- Prepare for stronger scrutiny during reviews and defenses.

8. Final Takeaway

AI accelerates execution, but it does not replace responsibility, judgment, or understanding.

The successful Ph.D. researcher in the AI era will:

- Think more carefully than AI

- Validate more rigorously than AI

- Question assumptions more deeply than AI

- Build knowledge that cannot be generated by prompting alone

Those who adapt to this reality will shape the future of research—rather than be displaced by it.

9. Conclusion

In the era of AI and large language models, the landscape of Ph.D. research in CSE, ECE, and IT is undergoing a fundamental transformation. Traditional indicators of scholarly capability (above-average knowledge, execution skill, and publication volume) are no longer sufficient to establish originality or intellectual contribution. AI can generate papers, code, simulations, and analyses, making superficial outputs common and easily replicable. Consequently, modern research must prioritize human judgment, critical reasoning, and a deep understanding of assumptions, limitations, and system behaviors. Scholars must focus on research areas where AI cannot replace intuition, insight, and conceptual foresight, such as system-level design, modeling under uncertainty, failure analysis, reproducibility, and methodological rigor. Success in this environment requires deliberate problem selection, careful experimentation, transparent validation, and defensible interpretation. Ultimately, the true measure of a Ph.D. will be the scholar’s ability to produce understanding, not just results, demonstrating that human intellect can navigate complexity and uncertainty in ways AI alone cannot replicate.

Discuss Through WhatsApp

Discuss Through WhatsApp

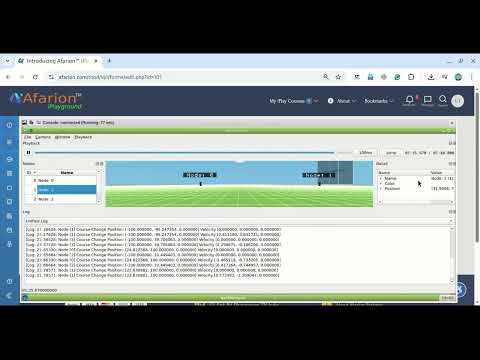

Take Me to Afarion ns-3 iPlayground

Take Me to Afarion ns-3 iPlayground