A. Introduction.

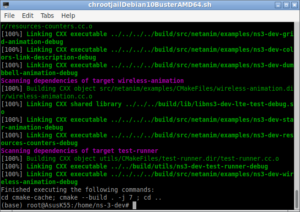

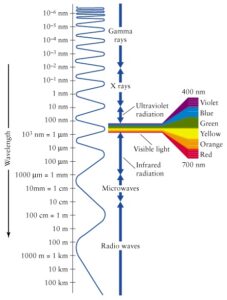

Of course, we are not talking about the ‘paperware’ you see next to this paragraph!

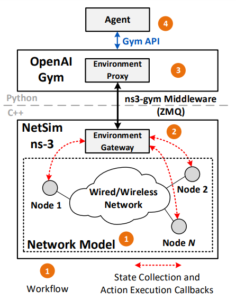

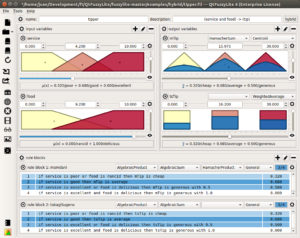

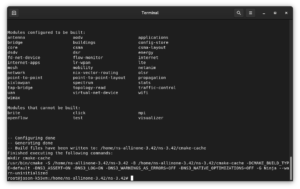

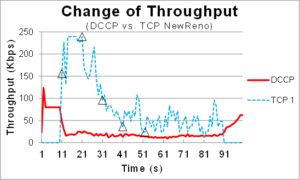

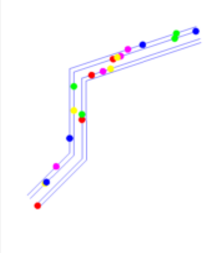

Most published journal papers talk only about some imaginary thing. If the research uses a simulation tool such as ns-3 to implement a proof-of-concept model, that implementation is always open to question.

During a discussion on the ns-3 user group, one of the ns-3 developers, Tommaso Pecorella, called the software implementation of an algorithm described in such a paper “paper-ware”.

As for the origin of the word ‘paper-ware’, I first saw it in Tommaso Pecorella’s message on the ns-3 user group. I believe “paper-ware” is an excellent name for the kind of software or model discussed in most journal papers: it can live only in a journal paper, not in the real world.

In fact, I have always had a hard-to-express feeling about the ‘unimplementable, impractical ideas’ commonly found in journal papers, particularly those related to Computer Science and Electronics and Communication Engineering. But I had no simple words to express it. ‘Paper-ware’ is an excellent single word that expresses everything. Thank you, Tommaso 🙂

After learning the word ‘paper-ware’, I searched Google to read some articles on it. But what I mostly found on Google were items made of paper, similar to the one you see here: ‘paperware’. Even Wikipedia only knows about ‘paperware’ and knows nothing about ‘paper-ware’.

As a last resort, yesterday I decided to learn more about paper-ware by asking an AI system some questions. Surprisingly, that AI system taught me quite a lot about ‘paper-ware’.

Most students, scholars, and researchers in general try to collect a LOT of reference papers during the initial stage of their research. Some of them then select one paper with a so-called novel idea and spend several months or even years on it without realizing that it is paper-ware.

If you are aware of the concept, a lot of paper-ware can be easily identified in published journal papers, especially when the authors mention using simulators such as ns-2 or ns-3 to implement their model or algorithm. My reason for posting this article is that I often find students and researchers seeking help to implement some paper-ware and spending their time and effort on it for nothing. Generally, that kind of effort just ends up as another paper-ware.

The aim of this article is to help researchers judge whether a catchy idea or model described in a journal paper is paper-ware or not. Making that judgment, however, takes a lot of skill (we may discuss it at the end of this article, after getting some feedback from the community). For now, I present here the knowledge that the AI taught me.

B. Paper-ware – Definition.

While publishing research is an important part of scientific careers, the focus should always be on producing high-quality, impactful research that advances the field and contributes to the broader scientific community’s understanding of a particular topic. Publishing paper-ware, on the other hand, is generally seen as a disingenuous and ultimately self-defeating practice.

a) Paper-ware in the Domain of AI and Networking.

- Over-hyped research papers: Some research papers in AI and networking may present over-hyped claims or unrealistic results that are difficult to reproduce or validate. For instance, a paper might claim to have developed a new AI algorithm that significantly outperforms state-of-the-art methods, but when other researchers attempt to replicate the results, they find that the performance gains are not as significant as claimed.

- Insignificant improvements: Some papers may propose small or incremental improvements to existing algorithms or techniques, without providing much insight or impact in the field. For instance, a paper might propose a slight modification to an existing AI model that improves its accuracy by only a few percentage points, but without offering any significant advantage over existing techniques.

- Lack of practical application: Some research papers in AI and networking may focus on theoretical or abstract concepts that have little practical application or relevance. For example, a paper might describe a new neural network architecture that is highly complex and difficult to implement in practice, or a networking protocol that is not suitable for real-world applications.

- Low-quality or incomplete research: Some papers may be rushed or poorly executed, lacking rigor or statistical analysis. For example, a paper might have a small sample size or an inadequate experimental setup, making it difficult to draw meaningful conclusions from the results.
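To make the small-sample and reproducibility concerns concrete, a reader can ask how many independent runs a claimed improvement rests on and how wide the resulting confidence interval is. The following Python sketch is a minimal illustration under stated assumptions: the throughput numbers are hypothetical, the function names are illustrative, and the Student-t critical values are hard-coded for 2 to 11 runs rather than taken from a statistics library. It computes a 95% confidence interval for the mean of each set of runs and checks whether the two intervals overlap.

```python
import statistics
from math import sqrt

# Two-sided 95% Student-t critical values t_{0.975, df}, hard-coded for small df
# (assumption: we avoid a dependency such as SciPy for this sketch).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def confidence_interval_95(samples):
    """Return (mean, low, high) of a 95% t-interval for the mean of samples."""
    n = len(samples)
    df = n - 1
    if df not in T_975:
        raise ValueError("this sketch only supports 2 to 11 independent runs")
    mean = statistics.mean(samples)
    # Half-width = t * (sample standard deviation) / sqrt(n)
    half_width = T_975[df] * statistics.stdev(samples) / sqrt(n)
    return mean, mean - half_width, mean + half_width


def intervals_overlap(a, b):
    """True if two (mean, low, high) intervals share any common range."""
    return a[1] <= b[2] and b[1] <= a[2]


# Hypothetical throughput figures (Mbit/s) from 5 independent runs each,
# e.g. simulator runs with different random seeds. Illustrative data only.
baseline = [9.8, 10.4, 9.1, 10.9, 9.6]
proposed = [10.3, 11.2, 9.4, 11.5, 10.1]

ci_base = confidence_interval_95(baseline)
ci_prop = confidence_interval_95(proposed)
print("baseline:", ci_base)
print("proposed:", ci_prop)
print("intervals overlap:", intervals_overlap(ci_base, ci_prop))
```

With these made-up numbers, the proposed method's mean is about 5% higher, yet the two intervals overlap heavily, so five runs alone do not convincingly support a claim of improvement. Overlapping intervals are only a rough screen, not a formal significance test; a Welch's t-test or more independent runs would be the natural next step.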

It’s important to note that not all papers that fall into these categories are necessarily paper-ware, as there may be valid reasons for proposing incremental improvements or focusing on theoretical concepts. However, when the primary goal is simply to pad a researcher’s publication record or CV without providing significant value or impact in the field, the research can be considered paper-ware.

b) Paper-ware in the Domain of Optimization Techniques in Networking.

Nature-inspired optimization techniques have gained popularity in networking research, and as with any other field, paper-ware can be found in this area as well. Here are a few examples of what could be considered paper-ware in the domain of nature-inspired optimization techniques in networking:

- Lack of comparison with existing methods: A paper may present a new optimization technique inspired by nature, but without comparing it to existing methods in the field. This makes it difficult to determine the relative performance of the proposed method, and whether it offers any significant advantage over existing techniques.

- Overly simplistic evaluation: Some papers may propose a new optimization technique, but only evaluate it using a small, simple testbed or dataset. This may not be sufficient to show the practical relevance or scalability of the proposed method.

- Lack of impact: Some papers may propose a new optimization technique, but fail to demonstrate its impact in real-world networking scenarios or applications. This can make it difficult to determine the practical relevance of the proposed method, and whether it offers any significant advantage over existing techniques.

- Over-exaggerated results: Some papers may present unrealistic or over-exaggerated results for the proposed optimization technique. For example, a paper might claim that the proposed method significantly outperforms existing techniques, but the results may not be statistically significant or may not be reproducible.

- Lack of theoretical foundation: Some papers may propose new optimization techniques without providing a solid theoretical foundation or justification for the proposed approach. This can make it difficult to evaluate the validity and generalizability of the proposed method.

It’s important to note that not all papers that fall into these categories are necessarily paper-ware, as there may be valid reasons for the approach taken or for the limitations of the evaluation. However, when the primary goal is simply to pad a researcher’s publication record or CV without providing significant value or impact in the field, the research can be considered paper-ware.
