Why Doing “Unique” Research Has Become Harder in the Age of Intelligent Machines

A Critical Analysis of the Changing Nature of Ph.D. Research

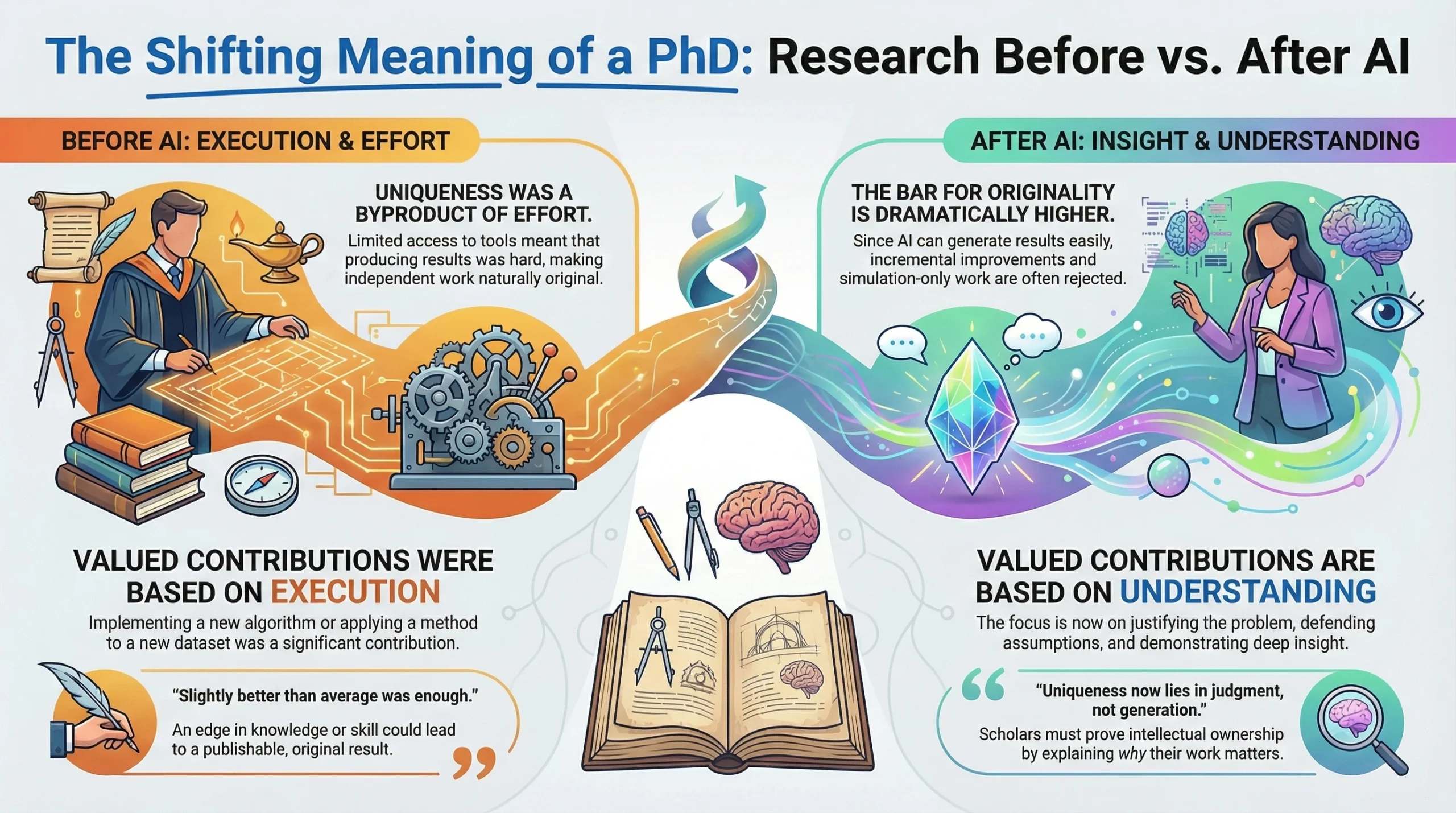

In previous decades, conducting high-quality research often required only a moderate edge in knowledge or skill over one’s peers. Access to literature was limited, experiments were time-consuming, and generating results demanded significant human effort, which naturally validated the originality and value of a scholar’s work. Today, the landscape of research has changed fundamentally due to the rise of Artificial Intelligence (AI) and Large Language Models (LLMs). Machines can now generate technical content, derive equations, simulate experiments, and even draft research manuscripts, often in minutes. This technological capability has disrupted the traditional signals of scholarly competence: producing results or polished outputs is no longer sufficient to demonstrate true understanding. Modern Ph.D. scholars must now go beyond execution and computation to prove intellectual ownership, judgment, and insight. The challenge lies not in generating results but in producing genuine understanding, critically evaluating assumptions, identifying meaningful contributions, and defending decisions rigorously. This article explores why achieving uniqueness in research has become substantially harder in the AI era and what it means for the future of doctoral scholarship.

1. 🕰️ Research in Previous Decades: A Different Intellectual Landscape

In earlier decades, academic research was limited by what human effort alone could accomplish.

- 🧠 Knowledge acquisition was slow and fragmented

- 📚 Literature surveys required weeks or months

- 💻 Implementation demanded strong hands-on expertise

- ✍️ Writing itself was a non-trivial intellectual task

A researcher with knowledge slightly above the average of their peers could:

- ✅ Identify a research gap

- ✅ Implement a solution independently

- ✅ Produce results that were naturally unique

- ✅ Publish with reasonable confidence of originality

📌 Uniqueness emerged organically, not because the work was extraordinary, but because few people could reach the same point at the same time.

2. 🤖 The AI Disruption: Intelligence Has Become Ubiquitous

The arrival of AI and LLMs fundamentally changed this equilibrium.

Today, AI systems can:

- 🤖 Generate research ideas and problem statements

- 📐 Derive equations and explain models

- 💻 Write simulation code and scripts

- 📊 Produce graphs, tables, and statistical analyses

- ✍️ Draft full-length journal papers

This has created a new reality:

- ❌ Knowledge slightly above average is no longer sufficient

- ❌ Execution skill alone does not guarantee originality

- ❌ Speed and polish no longer indicate depth

📌 Intelligence has been commoditized, and uniqueness has become scarce.

3. ⚠️ The Shift in the Meaning of “Original Contribution”

Earlier Definition of Contribution

- ✅ Implementing a new algorithm

- ✅ Applying an existing method to a new dataset

- ✅ Improving performance metrics marginally

Current Definition of Contribution (AI Era)

- 🔍 Justifying why the problem matters

- 🧩 Defining assumptions others did not question

- 🧠 Demonstrating insight, not just outcomes

- 🛠️ Designing systems, not just running tools

❗ What AI can easily do is no longer valued as a Ph.D. contribution.

4. 📉 Why Even Intelligent Researchers Must Work Harder Today

Even highly capable scholars now face new pressures:

- 🔍 Reviewers assume AI assistance by default

- 🧪 Experiments are expected to be more exhaustive

- 📖 Literature awareness is assumed to be near-complete

- 🧠 Conceptual clarity is tested aggressively

As a result:

- ❌ “Good enough” ideas fail to impress

- ❌ Incremental improvements are often rejected

- ❌ Simulation-only or implementation-only work is devalued

📌 The baseline expectation has risen dramatically, not because humans became weaker, but because machines became stronger.

5. 🧪 Validation Has Become More Important Than Novelty

In the AI era:

- 📈 Generating results is easy

- 🔬 Proving they are meaningful is hard

Modern evaluation focuses on:

- ✅ Assumption validity

- ✅ Sensitivity and robustness analysis

- ✅ Reproducibility and transparency

- ✅ Failure modes and limitations

The following are now treated with suspicion:

- ❌ Papers that avoid discussing limitations

- ❌ Over-polished results without explanation
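The robustness item in the evaluation list above can be made concrete with a small check: does a reported gain stand out from run-to-run noise? What follows is a minimal sketch, assuming each method has been run several times with different random seeds. The function name `gain_exceeds_noise`, the threshold `k`, and all score values are hypothetical and invented for illustration.

```python
import math
import statistics


def gain_exceeds_noise(baseline, proposed, k=2.0):
    """Return (gain, standard_error, verdict) for two lists of per-seed scores.

    verdict is True only when the mean gain is larger than k standard
    errors of the difference between the two means.
    """
    gain = statistics.mean(proposed) - statistics.mean(baseline)
    # Standard error of the difference of two independent sample means.
    se = math.sqrt(
        statistics.variance(baseline) / len(baseline)
        + statistics.variance(proposed) / len(proposed)
    )
    return gain, se, gain > k * se


# Hypothetical per-seed accuracies, invented for illustration only.
stable_baseline = [0.80, 0.81, 0.79, 0.80]
stable_proposed = [0.82, 0.83, 0.81, 0.82]
noisy_baseline = [0.70, 0.90, 0.75, 0.85]
noisy_proposed = [0.72, 0.92, 0.77, 0.87]

for name, base, prop in [
    ("stable runs", stable_baseline, stable_proposed),
    ("noisy runs", noisy_baseline, noisy_proposed),
]:
    gain, se, ok = gain_exceeds_noise(base, prop)
    print(f"{name}: gain={gain:.3f}, se={se:.3f}, exceeds noise: {ok}")
```

Both pairs show the same two-point mean gain, but only the low-variance pair clears the threshold. The `k = 2` cut-off is a rough rule of thumb rather than a formal significance test. Deciding whether a gain that clears it actually matters in deployment remains a judgment call.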

6. 🧑‍🎓 The Psychological Burden on Modern Research Scholars

AI has also introduced non-technical challenges:

- 😓 Constant self-doubt about originality

- 😟 Fear of being accused of AI overuse

- ⏳ Pressure to outperform machines

- 🤯 Cognitive overload due to infinite possibilities

📌 Unlike earlier generations, today’s scholars must prove intellectual ownership, not just intellectual effort.

7. 🔍 How Examiners and Reviewers Now Judge Research

During reviews and final defenses, evaluators increasingly ask:

- ❓ Why did you frame the problem this way?

- ❓ Which assumptions are yours, and which are inherited?

- ❓ What would fail if one condition changes?

- ❓ What did YOU learn that the AI did not tell you?

✅ Scholars who deeply understand their work survive

❌ Scholars who relied on AI for thinking are exposed quickly

8. 🧭 What Still Makes Research “Uniquely Human”

Despite AI’s power, some aspects remain difficult to automate:

- 🧠 Long-term curiosity and persistence

- 🧩 System-level reasoning across domains

- ⚖️ Engineering judgment under uncertainty

- 🧪 Designing meaningful experiments

- 🗣️ Defending ideas under questioning

📌 Uniqueness now lies in judgment, not generation.

9. 🔴 Final Reality Check

🔴 The era where “slightly better than average” was enough is over

🟢 The era of deep understanding, defensible assumptions, and intellectual ownership has begun

AI has not killed research.

❌ It has killed lazy research

❌ It has killed formulaic research

❌ It has killed unquestioned assumptions

✅ It rewards thinkers

✅ It rewards builders

✅ It rewards explainers

10. ✨ Concluding Thought

Producing Understanding in an Age Where Machines Produce Results

In the age of intelligent machines, producing results has become increasingly inexpensive, fast, and automated. Simulations can be executed at scale, models can be trained in hours, equations can be derived symbolically, and entire manuscripts can be drafted with minimal human intervention. As a consequence, results themselves no longer serve as reliable evidence of intellectual contribution. What once took months of effort can now be replicated—or even surpassed—by an algorithm in minutes.

This shift fundamentally alters the meaning of a Ph.D.

A doctoral degree was never intended to certify one’s ability to generate outputs; rather, it was meant to demonstrate mastery of a domain at a level where the scholar can reason beyond tools, question assumptions, and explain phenomena with authority. In earlier eras, the act of producing results implicitly demonstrated understanding because few had the capability to do so. Today, that implication no longer holds. Machines can generate correct-looking answers without comprehension, coherence, or accountability.

True doctoral scholarship now lies in producing understanding—an outcome that remains stubbornly human.

Producing understanding means being able to articulate why a problem is worth studying, not merely how to solve it. It requires identifying the assumptions embedded within models and recognizing when those assumptions no longer reflect reality. It involves tracing causal relationships rather than reporting correlations, and explaining not only why a system performs well under certain conditions but also why it fails under others.

Understanding also manifests as judgment—the ability to decide which parameters matter, which results are meaningful, and which improvements are illusory. A machine may optimize metrics, but it cannot judge relevance. It cannot decide whether a 2% gain matters in a real deployment, whether a model generalizes beyond its testbed, or whether a simulation captures the essence of a physical system. These decisions demand experience, context, and intellectual responsibility.

Furthermore, producing understanding requires narrative coherence across time. A Ph.D. scholar must demonstrate sustained engagement with a problem, showing how their thinking evolved, how earlier assumptions were refined or discarded, and how insights accumulated into a coherent body of knowledge. This longitudinal intellectual growth cannot be generated on demand by AI; it must be lived through years of questioning, failure, and refinement.

Most importantly, understanding is what survives scrutiny. During peer review or a final viva voce examination, polished results and elegant plots quickly lose their power if the scholar cannot defend them. Examiners do not test the outputs—they test the mind behind the outputs. In that setting, only genuine understanding provides resilience. Memorized explanations, AI-generated phrasing, or surface-level familiarity collapse under probing questions, while deep comprehension reveals itself through clarity, confidence, and adaptability.

In this new academic reality, the value of a Ph.D. is no longer measured by the number of figures, tables, or publications produced, but by the scholar’s demonstrated ability to think independently in a world where thinking can be simulated. The doctoral journey is thus transformed: from a race to generate results into a disciplined pursuit of insight.

Results can be copied.

Understanding must be earned.

This is the enduring purpose of the Ph.D. in the age of intelligent machines—and the standard by which future scholars will ultimately be judged.


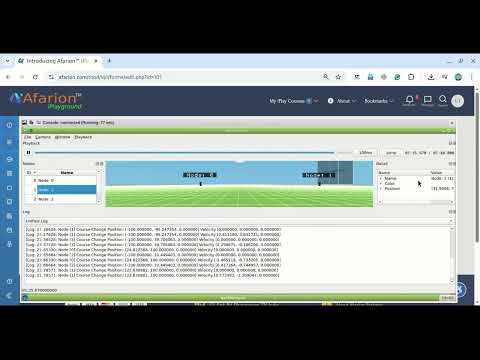

Take Me to Afarion ns-3 iPlayground

Take Me to Afarion ns-3 iPlayground