Ph.D. Research Using ns-3 in the Age of AI and Large Language Models

Choosing the Right Problems, Conducting Defensible Research, and Surviving Evaluation & Defense

The emergence of Artificial Intelligence (AI) and Large Language Models (LLMs) has significantly transformed the way research in Computer Science, Electronics, and Information Technology is conducted and evaluated. Tasks that were once central to doctoral research—such as protocol design, simulation scripting, data analysis, and technical writing—can now be partially automated, raising new concerns about originality, depth, and intellectual ownership. In this evolving academic landscape, simulation-based research using tools such as ns-3 faces increased scrutiny, particularly when contributions are limited to incremental performance evaluations or parameter tuning. However, ns-3 remains a powerful and relevant platform when used to address fundamentally hard problems involving system modeling, validation, scalability, and real-world constraints. This article examines how Ph.D. scholars can effectively employ ns-3 as a primary research tool in the age of AI by selecting defensible research problems, adopting rigorous modeling and experimental practices, and aligning their contributions with contemporary expectations of novelty and credibility. The discussion aims to guide researchers toward future-proof doctoral work that emphasizes understanding, judgment, and system-level insight over mere result generation.

1. Context: Why ns-3-Based Ph.D. Research Is Now More Difficult Than Before

In previous decades, research difficulty was bounded by human capacity. A scholar with slightly above-average knowledge could identify a gap, implement a solution, simulate it, and publish the results with reasonable confidence that the work was novel.

Today, the situation has fundamentally changed:

- AI can generate algorithms, simulation scripts, equations, graphs, and even paper drafts within minutes.

- Reviewers, supervisors, and examiners assume AI assistance by default.

- The burden of proof has shifted from “what you implemented” to “why this could not have been auto-generated”.

As a result:

- ❌ Incremental ns-3 simulations are no longer sufficient

- ❌ Reproducing known protocols with minor parameter tuning is considered weak

- ❌ “Simulation-only papers” without theoretical or system-level depth are increasingly rejected

This does not mean ns-3 research is dead. Instead, the nature of acceptable ns-3 research has changed.

2. ❌ ns-3 Research Areas That Are Weak or High-Risk in the AI Era

The following areas are dangerous choices for a modern Ph.D. because they are either AI-replicable, saturated, or intellectually shallow.

❌ Pure Performance Comparison Studies

- Comparing throughput, delay, or packet loss of existing protocols under slightly different parameters

- Running simulations without proposing new abstractions or models

👉 Why risky:

AI can already generate such simulations and plots automatically, making it hard to prove originality.

❌ Minor Variants of Existing Protocols

- Small tweaks to AODV, TCP, LTE schedulers, or LoRaWAN parameters

- “Improved version” papers without fundamental changes

👉 Why risky:

Reviewers often ask: “Why is this not just parameter tuning?”

❌ ML-for-the-Sake-of-ML in ns-3

- Adding a neural network to routing or scheduling without system justification

- Treating ns-3 merely as a data generator for ML models

👉 Why risky:

Reviewers increasingly recognize “decorative ML”: a learning component added for appearance, with no system-level reason for being there.

3. ✅ ns-3 Research Areas That Are Strong and Future-Proof

The following areas remain highly defensible, even in an AI-dominated research ecosystem.

✅ 3.1 Fundamental Modeling of Physical or Network Phenomena

Examples:

- Novel radio propagation models (5G, 6G, deep space, underwater, extreme terrain)

- Energy consumption models grounded in hardware behavior

- Cross-layer interference modeling

👉 Why strong:

- Requires domain knowledge + physics + validation

- AI cannot invent correct physical assumptions without expert guidance
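To make "physical assumptions" concrete, here is a minimal sketch of the textbook log-distance path loss model, the kind of baseline a novel propagation model would be compared against. ns-3 ships its own log-distance model (`LogDistancePropagationLossModel`); the function names, argument names, and default values below are illustrative assumptions only and are not calibrated to any environment.

```python
import math


def log_distance_path_loss_db(distance_m, exponent=3.0,
                              ref_distance_m=1.0, ref_loss_db=40.0):
    """Path loss in dB under the log-distance model.

    PL(d) = PL(d0) + 10 * n * log10(d / d0)

    All default values are placeholders (assumptions for illustration).
    """
    if distance_m <= 0 or ref_distance_m <= 0:
        raise ValueError("distances must be positive")
    # The model is not meaningful below the reference distance,
    # so this sketch clamps to the reference loss there.
    if distance_m <= ref_distance_m:
        return ref_loss_db
    return ref_loss_db + 10.0 * exponent * math.log10(distance_m / ref_distance_m)


def received_power_dbm(tx_power_dbm, distance_m, **model_kwargs):
    """Received power = transmit power minus path loss (no antenna gains)."""
    return tx_power_dbm - log_distance_path_loss_db(distance_m, **model_kwargs)
```

With an exponent of 3.0 and a 40 dB reference loss at 1 m, the loss at 10 m is 70 dB. Every one of those three numbers is an assumption an examiner can challenge, and a research contribution in this area has to justify them (or replace the model) from physics and measurements.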

✅ 3.2 Simulator Architecture & Framework Contributions

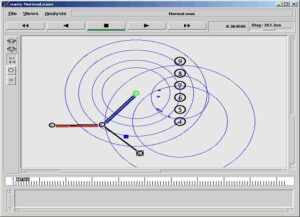

Examples:

- New ns-3 modules or abstractions

- Integration of external scientific tools (e.g., orbital dynamics, mobility engines)

- Improving scalability, accuracy, or reproducibility of ns-3

👉 Why strong:

- ❌ AI struggles with large, evolving simulator architectures

- ✅ Code-level originality is auditable and defensible

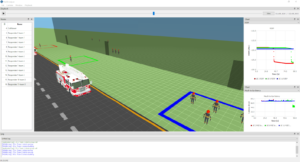

✅ 3.3 System-Level Design for Extreme or Untested Scenarios

Examples:

- 5G/6G and deep-space communication networks

- Disaster-resilient networks

- Remote border security and healthcare systems

- Non-terrestrial networks (NTN, UAV, satellite swarms)

- Innovative solutions to Global Trajectory Optimisation Competition (GTOC) challenges, where a challenge involves a network communication aspect

👉 Why strong:

- These problems are not well-represented in public datasets

- Require engineering judgment, not just optimization

✅ 3.4 Validation-Oriented Research

Examples:

- Comparing ns-3 outputs with real-world measurements

- Sensitivity analysis across large parameter spaces

- Identifying when simulations break down

👉 Why strong:

- Reviewers value credibility over novelty

- AI-generated work rarely includes rigorous validation
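Comparing simulator output with measurements usually starts with a few simple error metrics. The sketch below assumes the simulated and measured values are already paired, with the same scenario point at the same index. The function name and the returned keys are illustrative choices, not part of ns-3.

```python
import math


def validation_errors(simulated, measured):
    """Summarize how far simulated values are from paired measurements."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("need two non-empty sequences of equal length")
    diffs = [s - m for s, m in zip(simulated, measured)]
    n = len(diffs)
    return {
        # Positive bias means the simulator overestimates on average.
        "bias": sum(diffs) / n,
        # RMSE penalizes large individual deviations more than bias does.
        "rmse": math.sqrt(sum(d * d for d in diffs) / n),
        # The worst case often points to where the model breaks down.
        "max_abs_error": max(abs(d) for d in diffs),
    }
```

Reporting the bias, the RMSE, and the worst case together is a design choice: a small average error can hide one scenario where the simulation fails badly, and that scenario is often the most interesting result.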

4. Phases of ns-3 Ph.D. Research in the AI Era

A successful Ph.D. must now be structured deliberately, not opportunistically.

🧭 Phase 1: Problem Selection (Most Critical Phase)

✅ Ask:

- Is this problem rooted in physics, systems, or real constraints?

- Can ChatGPT solve this end-to-end? (If yes → reject)

❌ Avoid:

- “I will simulate X and see what happens”

- Overcrowded topics with hundreds of similar papers

📐 Phase 2: Modeling Before Simulation

✅ Do:

- Define assumptions explicitly

- Justify every parameter with literature or hardware constraints

- Explain why ns-3 is necessary, not just convenient

❌ Avoid:

- Jumping directly to code

- Copying example scripts without rethinking models
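One lightweight way to keep assumptions explicit is to store every model parameter with its justification and refuse to run while any justification is missing. The following is a sketch under that assumption. The `Parameter` class, its fields, and the `unjustified` helper are hypothetical names introduced here for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Parameter:
    name: str
    value: float
    unit: str
    source: str  # citation, datasheet, or measurement that justifies the value


def unjustified(parameters):
    """Return the names of parameters that have no recorded source."""
    return [p.name for p in parameters if not p.source.strip()]


# Illustrative entries only; the values and the source text are placeholders.
ledger = [
    Parameter("tx_power", 14.0, "dBm", "placeholder: radio datasheet"),
    Parameter("path_loss_exponent", 3.0, "", ""),
]
missing = unjustified(ledger)  # the second entry has no source yet
```

A ledger like this can also be exported directly into the thesis as the table of modeling assumptions.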

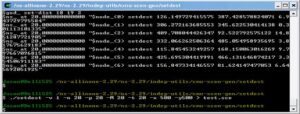

💻 Phase 3: Implementation with Traceable Originality

✅ Do:

- Write custom modules or extensions

- Maintain version control and design logs

- Show architectural diagrams of your simulator changes

❌ Avoid:

- Treating ns-3 as a black box

- Excessive reliance on existing examples
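Version control covers the code, but each result also needs a record of the exact configuration that produced it. The sketch below builds such a record, assuming the scenario configuration fits in a JSON-serializable dictionary. The function and field names are hypothetical. The seed and run number stand for the values passed to the simulator's random number generator, and `code_version` is whatever identifier your version control system provides.

```python
import hashlib
import json


def run_manifest(config, seed, run_number, code_version):
    """Build a record that ties one simulation run to its exact inputs."""
    # Sorting keys makes the hash independent of dictionary ordering.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    return {
        "config_sha256": digest,
        "seed": seed,
        "run": run_number,
        "code_version": code_version,
        "config": config,
    }
```

Storing one manifest next to every output file lets you show an examiner which code and parameters produced any given plot.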

📊 Phase 4: Experimental Design (Where Many Fail)

✅ Do:

- Multi-dimensional experiments (not single plots)

- Stress testing, corner cases, failure modes

- Explain why results behave as they do

❌ Avoid:

- Cherry-picked scenarios

- Over-polished plots without explanation
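A multi-dimensional experiment can be sketched as a full factorial grid with several independent replications per point, summarized by a mean and a confidence interval rather than a single run. The code below is a minimal sketch of that idea. The function names are hypothetical, and the interval uses a normal approximation (z = 1.96), which is an assumption that becomes optimistic for small sample counts; a Student t critical value is the safer choice there.

```python
import itertools
import math
import statistics


def experiment_grid(factors, replications):
    """Yield (parameter point, run number) for a full factorial design."""
    names = sorted(factors)
    for values in itertools.product(*(factors[name] for name in names)):
        point = dict(zip(names, values))
        # Each replication should use a distinct, independent random stream.
        for run in range(1, replications + 1):
            yield point, run


def mean_with_ci(samples, z=1.96):
    """Return (mean, half-width) of an approximate 95% confidence interval."""
    if len(samples) < 2:
        raise ValueError("need at least two replications")
    mean = statistics.fmean(samples)
    half_width = z * statistics.stdev(samples) / math.sqrt(len(samples))
    return mean, half_width
```

For example, two node counts and three traffic rates with five replications each give 30 runs. Plotting each point with its interval makes it visible when an apparent improvement is within the run-to-run noise.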

📝 Phase 5: Publication Strategy in the AI Era

✅ Strong papers:

- Argue why the problem itself matters

- Focus on methodology and insight, not just numbers

- Include limitations and negative results

❌ Weak papers:

- Overuse of AI-polished language

- Vague novelty claims

- “Simulation results show improvement” without insight

📘 Phase 6: Thesis Writing Under AI Scrutiny

✅ Examiners now expect:

- Clear intellectual ownership

- Coherent narrative across chapters

- Evidence of long-term engagement with the problem

❌ Red flags:

- Overly generic explanations

- Sudden jumps in writing quality

- Inconsistent terminology

5. 🎓 Facing the Final Defense: What Actually Matters Now

In the AI era, the viva is the ultimate filter.

Examiners will test:

- ❓ Why did you choose this model?

- ❓ What breaks if this assumption is wrong?

- ❓ What would you do differently if starting again?

✅ If you truly understand your work:

- You will survive—even if AI helped with writing

❌ If you relied on AI for thinking:

- The defense will expose it within minutes

6. Final Reality Check for Future ns-3 Ph.D. Scholars

🔴 The age of “slightly above average effort” Ph.D.s is over

🟢 The age of deep, defensible, system-level thinking has begun

AI has not killed research—it has killed shallow research.

A scholar who:

- Understands models deeply

- Builds simulators thoughtfully

- Explains results honestly

- Defends assumptions rigorously

will still succeed, even in an AI-saturated academic world.

7. Conclusion

Ph.D. research using the ns-3 simulator in the age of AI and Large Language Models demands a fundamental shift in mindset, expectations, and execution. As AI systems can now generate code, results, and even research narratives with ease, the true value of doctoral work no longer lies in running simulations or producing performance graphs alone. Instead, it lies in the scholar’s ability to formulate meaningful problems, build defensible models, and explain system behavior with depth and clarity. ns-3 remains a powerful and relevant research tool, but only when it is used as an instrument for investigation rather than as a black-box experiment engine. Future-proof ns-3 research must emphasize physical realism, architectural innovation, rigorous validation, and honest discussion of limitations. Most importantly, the scholar must demonstrate intellectual ownership across all phases of research—from problem definition to final defense. In an AI-driven academic ecosystem, simulations can be reproduced, results can be regenerated, but genuine understanding, engineering judgment, and the ability to defend ideas under scrutiny remain uniquely human.

Discuss Through WhatsApp

Discuss Through WhatsApp

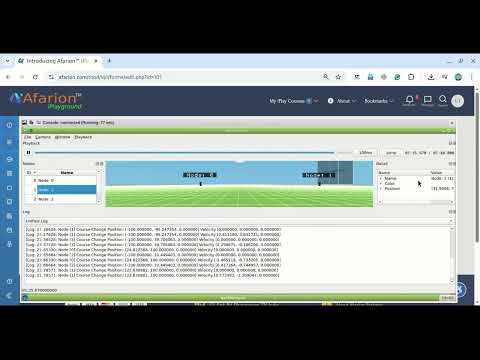

Take Me to Afarion ns-3 iPlayground

Take Me to Afarion ns-3 iPlayground